
PCI-X Definition

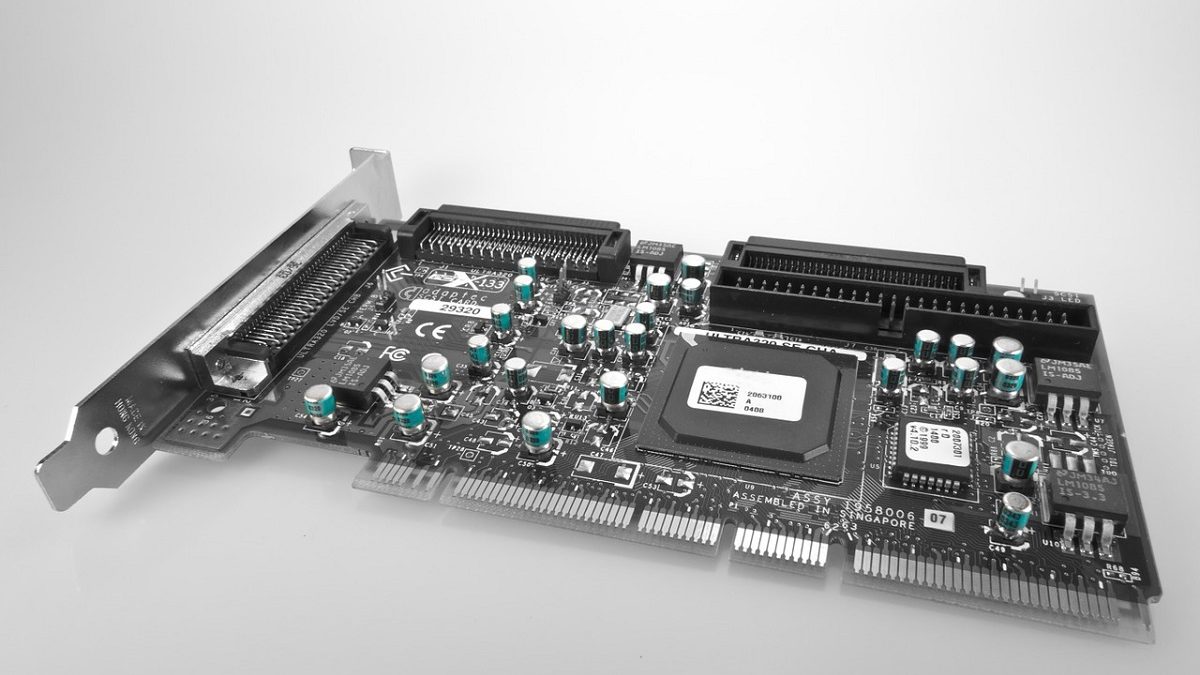

PCI-X, short for Peripheral Component Interconnect eXtended, is a computer bus and expansion card standard that enhances the 32-bit PCI local bus to provide the higher bandwidth demanded mostly by servers and workstations.

It uses a modified protocol to support higher clock speeds (up to 133 MHz), but is otherwise similar in electrical implementation.

PCI-X 2.0 added speeds up to 533 MHz, along with a reduction in electrical signal levels.

The standard fully specifies both 32- and 64-bit PCI connectors, and PCI-X 2.0 added a 16-bit variant for embedded applications.
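The clock rates and bus widths above set a theoretical peak bandwidth: bytes moved per transfer multiplied by transfers per second. The sketch below is a minimal illustration under stated assumptions: the nominal "66 MHz" and "133 MHz" figures are treated as 66.67 and 133.33 MHz, and the PCI-X 2.0 modes are modelled as two and four data transfers per 133.33 MHz clock, as they are commonly described. The mode labels and the helper `peak_bandwidth_mb_s` are hypothetical names, not part of any specification or API, and real throughput is lower once protocol overhead is counted.

```python
# Assumption: the nominal "133 MHz" PCI-X clock is 133.33 MHz.
BASE_CLOCK_MHZ = 400 / 3

# Effective transfer rates in mega-transfers per second (MT/s) per mode.
# PCI-X 266/533 are modelled (assumption) as 2x and 4x transfers per clock.
MODES_MTS = {
    "PCI-X 66": BASE_CLOCK_MHZ / 2,
    "PCI-X 133": BASE_CLOCK_MHZ,
    "PCI-X 266": BASE_CLOCK_MHZ * 2,
    "PCI-X 533": BASE_CLOCK_MHZ * 4,
}


def peak_bandwidth_mb_s(bus_width_bits: int, transfers_mts: float) -> float:
    """Theoretical peak in MB/s: bytes per transfer times transfers per microsecond."""
    if bus_width_bits not in (16, 32, 64):
        raise ValueError("PCI-X 1.0/2.0 define 16-, 32- and 64-bit widths only")
    return bus_width_bits / 8 * transfers_mts


if __name__ == "__main__":
    for mode, rate in MODES_MTS.items():
        for width in (32, 64):
            mb_s = peak_bandwidth_mb_s(width, rate)
            print(f"{mode:>10} {width}-bit: {mb_s:7.0f} MB/s")
```

For a 64-bit bus this gives about 1,066 MB/s in PCI-X 133 mode and about 4,266 MB/s in PCI-X 533 mode, which matches the order of magnitude usually quoted for PCI-X.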

In modern designs, it has been replaced by the similar-sounding PCI Express (abbreviated PCIe), which uses a different physical connector and a very different electrical design, with one or more narrow but fast serial connection lanes instead of many slower connections in parallel.

What is the History of PCI-X?

Background and motivation

In PCI, a transaction that cannot complete immediately is postponed by either the target or the initiator issuing retry cycles, during which no other agents can use the PCI bus.

Because PCI lacks a split-response mechanism that would let the target return data at a later time, the bus remains occupied by the target issuing retry cycles until the data is ready.

In PCI-X, after the master issues a request, it disconnects from the PCI bus, allowing other agents to use the bus. The split response containing the requested data is generated only when the target is ready to return all of the data.

Split responses increase bus efficiency by eliminating retry cycles, during which no data can be transferred across the bus.
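To make the efficiency argument concrete, the toy model below counts how many bus cycles a slow read occupies under the simplified retry behaviour described above versus a split transaction. It is a sketch under loud assumptions: the cycle costs `REQUEST_CYCLES` and `COMPLETION_CYCLES` and all function names are hypothetical, and real PCI and PCI-X phase lengths and arbitration rules are more involved.

```python
from dataclasses import dataclass

# Hypothetical cycle costs for illustration only; real phase lengths differ.
REQUEST_CYCLES = 2      # phase that starts a read request
COMPLETION_CYCLES = 2   # phase that starts a split completion


@dataclass
class Read:
    latency_cycles: int  # cycles the target needs before the data is ready
    data_cycles: int     # cycles needed to move the data once it is ready


def bus_busy_retry(r: Read) -> int:
    """Simplified PCI model from the text: retry cycles hold the bus for
    the whole wait, so the target's latency is charged to the bus."""
    return REQUEST_CYCLES + r.latency_cycles + r.data_cycles


def bus_busy_split(r: Read) -> int:
    """Split transaction: the master disconnects after the request, and the
    target later starts a completion that carries the data."""
    return REQUEST_CYCLES + COMPLETION_CYCLES + r.data_cycles


def cycles_freed(r: Read) -> int:
    """Bus cycles other agents can use during the wait under the split model."""
    return bus_busy_retry(r) - bus_busy_split(r)


if __name__ == "__main__":
    slow_read = Read(latency_cycles=20, data_cycles=8)
    print("retry:", bus_busy_retry(slow_read), "cycles")
    print("split:", bus_busy_split(slow_read), "cycles")
    print("freed for other agents:", cycles_freed(slow_read))
```

With a 20-cycle target latency, the retry model ties up the bus for 30 cycles while the split model uses 12, freeing 18 cycles. For very short latencies the extra completion phase makes `cycles_freed` negative, which is why split transactions pay off mainly for slow targets.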

PCI-X registers its I/Os to the PCI clock, usually by means of a PLL that actively controls I/O delay at the bus pins. The lack of registered I/Os had limited PCI to a maximum frequency of 66 MHz.

The resulting improvement in setup time allows the frequency to increase to 133 MHz.
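Why does tighter I/O timing matter? On a synchronous bus, a signal launched on one clock edge must leave the driver, cross the bus and satisfy the receiver's setup time before the next edge. The sketch below applies that standard timing-budget check; the function names and the delay values are hypothetical, chosen only to illustrate the trade-off, and are not figures from the PCI or PCI-X specifications.

```python
def clock_period_ns(freq_mhz: float) -> float:
    """Length of one clock cycle in nanoseconds (1 / f, with f in MHz)."""
    return 1000.0 / freq_mhz


def meets_setup_timing(freq_mhz: float, t_clk_to_out_ns: float, t_flight_ns: float,
                       t_setup_ns: float, t_skew_ns: float = 0.0) -> bool:
    """Standard synchronous-bus check: driver clock-to-output delay, bus flight
    time, receiver setup time and clock skew must all fit in one period."""
    total = t_clk_to_out_ns + t_flight_ns + t_setup_ns + t_skew_ns
    return total <= clock_period_ns(freq_mhz)


if __name__ == "__main__":
    # Hypothetical delays, for illustration only.
    budget = dict(t_clk_to_out_ns=6.0, t_flight_ns=2.0, t_setup_ns=3.0)
    for f in (200 / 3, 400 / 3):  # nominal "66 MHz" and "133 MHz"
        print(f"{f:6.2f} MHz: period {clock_period_ns(f):5.2f} ns, "
              f"fits: {meets_setup_timing(f, **budget)}")
```

An 11 ns budget fits comfortably in the 15 ns period at 66.67 MHz but not in the 7.5 ns period at 133.33 MHz; shrinking clock-to-output and setup delays, which is the goal of tightly controlled registered I/O, is what makes the faster clock feasible.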


What are the types?

PCI-X 1.0

The standard was developed jointly by Compaq, IBM, and HP and submitted for approval in 1998.

It was an effort to codify proprietary server extensions to the PCI local bus, to address several shortcomings in PCI, and to increase the performance of high-bandwidth devices such as Gigabit Ethernet, Fibre Channel, and Ultra3 SCSI cards. It also allowed processors to be interconnected in clusters.

The first PCI-X products were manufactured in 1998, such as the Adaptec AHA-3950U2B dual Ultra2 Wide SCSI controller.

However, at the time the connector was simply referred to as “64-bit ready PCI” on the packaging, hinting at future forward compatibility.

PCI-X 2.0

In 2003, the PCI-SIG ratified PCI-X 2.0. It adds 266 MHz and 533 MHz variants, yielding roughly 2,132 MB/s and 4,266 MB/s of throughput.

PCI-X 2.0 also makes additional protocol revisions designed to improve system reliability, and adds error-correcting codes (ECC) to the bus to avoid re-sends.
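ECC lets a receiver repair a corrupted transfer locally instead of asking for it to be sent again. The specific code PCI-X 2.0 uses is not described here, so the sketch below swaps in the textbook Hamming(7,4) code purely to show the principle: three parity bits let the receiver locate and flip any single erroneous bit in a 7-bit word. The function names are hypothetical and this is not the PCI-X 2.0 ECC scheme.

```python
def hamming74_encode(nibble: int) -> list[int]:
    """Encode 4 data bits into a 7-bit Hamming codeword.

    Bit positions are 1..7; parity bits sit at 1, 2 and 4, data at 3, 5, 6, 7.
    """
    d = [(nibble >> i) & 1 for i in range(4)]  # d[0] = least significant bit
    code = [0] * 8  # index 0 unused so list indices match positions 1..7
    code[3], code[5], code[6], code[7] = d
    for p in (1, 2, 4):
        # Even parity over every other position whose index has bit p set.
        code[p] = sum(code[i] for i in range(1, 8) if i & p and i != p) % 2
    return code[1:]


def hamming74_decode(word: list[int]) -> int:
    """Correct up to one flipped bit and return the 4 data bits."""
    code = [0] + list(word)
    syndrome = 0
    for p in (1, 2, 4):
        # A failing parity check contributes its position weight to the syndrome.
        if sum(code[i] for i in range(1, 8) if i & p) % 2:
            syndrome |= p
    if syndrome:
        code[syndrome] ^= 1  # the syndrome is the position of the flipped bit
    return code[3] | code[5] << 1 | code[6] << 2 | code[7] << 3


if __name__ == "__main__":
    sent = hamming74_encode(0b1011)
    received = list(sent)
    received[4] ^= 1  # simulate a single-bit error on the bus
    print(hamming74_decode(received) == 0b1011)
```

Real bus ECC protects much wider words and usually also detects (but cannot correct) double-bit errors; the point here is only that a single-bit error can be fixed without a retransmission.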

To address one of the most common complaints about the PCI-X form factor, its large 184-pin connector, 16-bit ports were developed to allow PCI-X to be used in devices with tight space constraints.

Similar to PCI Express, peer-to-peer (PtP) functions were added to allow devices on the bus to talk to each other without burdening the CPU or bus controller.

Despite the various theoretical advantages of PCI-X 2.0 and its backward compatibility with PCI-X and PCI devices, it has not been implemented on a large scale.

This lack of adoption is mainly because hardware vendors chose to integrate PCI Express instead.


Kamran Sharief
